Measurement Model of Advocacy Loyalty – Measured by Overall Satisfaction, Likelihood to Recommend and Continue Buying

Companies with customer experience management (CEM) programs rely heavily on customer feedback when making business decisions, including setting strategy, compensating employees, allocating company resources, changing business processes, benchmarking best practices and developing employee training programs, to name a few. The quality of the customer feedback directly affects the quality of these business decisions: poor-quality feedback leads to sub-optimal decisions, while high-quality feedback supports better ones. We typically measure the quality of subjective ratings by two standards: reliability and validity.

To understand reliability and validity, you need psychometrics. Psychometrics is the science of educational and psychological measurement and is primarily concerned with the development and validation of measurement instruments like questionnaires. Psychometricians apply scientifically accepted standards when developing and evaluating their questionnaires. The interested reader can learn more about reliability and validity by reading Standards for Educational and Psychological Testing.

I recently wrote about assessing the reliability of customer experience management (CEM) programs. Reliability refers to the degree to which your CEM program delivers consistent results. There are different types of reliability, each providing different insights about your program. Inter-rater reliability indicates whether different customers who receive the same service (e.g., different Contacts within the same Account) give similar feedback. Test-retest reliability indicates whether customers give similar ratings over time. Internal consistency reliability indicates whether an aggregated score (an average of different items/questions) makes statistical sense. While assessing the reliability of your CEM program is essential, you also need to evaluate its validity.
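Internal consistency is commonly summarized with Cronbach's coefficient alpha (Cronbach, 1951). The following is a minimal sketch rather than a definitive implementation: the function name `cronbach_alpha` and the 0-10 ratings from six respondents are hypothetical, made up purely for illustration.

```python
from statistics import variance

def cronbach_alpha(items):
    """Coefficient alpha for a list of items.

    items: one list of ratings per survey question, all the same length
    (one entry per respondent, in the same respondent order).
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Composite (summed) score for each respondent across all items.
    totals = [sum(ratings) for ratings in zip(*items)]
    # Sum of the individual item variances (sample variances).
    item_variance_sum = sum(variance(item) for item in items)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))

# Hypothetical ratings (illustrative data only, not real survey results).
overall_sat = [9, 7, 8, 3, 6, 10]
recommend = [10, 6, 8, 2, 7, 9]
buy_again = [9, 7, 7, 4, 6, 10]

alpha = cronbach_alpha([overall_sat, recommend, buy_again])
print(f"Cronbach's alpha: {alpha:.2f}")
```

With these made-up ratings, alpha comes out close to 0.97, which would suggest the three questions can reasonably be averaged into a single score. Real data will rarely be this tidy.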

Validity

Validity refers to the degree to which customer ratings generated from your CEM program measure what they are intended to measure. Because of the subjective nature of customer feedback ratings, we rely on special types of evidence to determine the extent to which ratings reflect underlying customer attitudes/feelings. We want to answer the question: “Are we really measuring ‘satisfaction,’ ‘customer loyalty,’ or other important customer variables with our customer feedback tool?”

Unlike reliability, there is no single statistic that provides an overall index of the validity of the customer ratings. Rather, the methods for gathering evidence of validity can be grouped into three different approaches: content-related, criterion-related, and construct-related. When determining the validity of the customer ratings, you will likely rely on a combination of these three approaches:

1. Content-related Evidence

Content-related evidence is concerned with the degree to which the items in the customer survey are representative of a “defined universe” or “domain of content.” The domain of content typically refers to all possible questions that could have been used in the customer survey. The goal of content-related validity is to have a set of items that best represents all the possible questions that could be asked of the customer.

For customer feedback tools, the important content domain is the set of possible questions that could have been asked of the respondents. Two methods can be used to understand the content-related validity of the customer survey. First, review the process by which the survey questions were generated. The review process would necessarily include documentation of the development of the survey questions. Survey questions could be generated by subject matter experts (SMEs) and/or actual customers themselves. SMEs typically provide the judgment regarding the content validity of the feedback tool. Second, a random sample of open-ended comments from respondents can be reviewed and summarized to determine the general content of these verbatim comments. Compare these general content areas with existing quantitative survey questions to discern the overlap between the two. The degree to which customers’ verbatim comments match the content of the quantitative survey questions indicates some level of content validity.
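The comparison of comment themes against survey questions can be sketched as a simple set overlap. This is only an illustration: the theme labels, the survey topics and the `content_overlap` helper are hypothetical, and in practice the themes would come from someone coding the verbatim comments by hand.

```python
def content_overlap(comment_themes, survey_topics):
    """Return the share of comment themes covered by the survey,
    plus the themes the survey does not ask about."""
    covered = comment_themes & survey_topics
    missing = comment_themes - survey_topics
    coverage = len(covered) / len(comment_themes)
    return coverage, sorted(missing)

# Hypothetical themes coded from a random sample of open-ended comments.
comment_themes = {"product quality", "technical support",
                  "billing", "ease of doing business"}
# Hypothetical topics covered by the quantitative survey questions.
survey_topics = {"product quality", "technical support",
                 "account management", "communications"}

coverage, missing = content_overlap(comment_themes, survey_topics)
print(f"Share of comment themes covered by the survey: {coverage:.0%}")
print("Themes customers raise that the survey does not ask about:", missing)
```

A low coverage figure, or a long list of missing themes, would be a prompt to revisit the survey questions rather than a formal test of content validity.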

While there are many potential questions to ask your customers, research has shown that only a few general customer experience (CX) questions are needed for a customer survey. These general CX questions represent broad customer touchpoints (e.g., product, customer support, account management, company communications) across the three phases of the customer lifecycle (marketing, sales and service).

2. Criterion-related Evidence

Criterion-related evidence is concerned with examining the statistical relationship (usually in the form of a correlation coefficient) between customer feedback (typically ratings) and another measure, or criterion. With this type of evidence, what the criterion is and how it is measured are of central importance. The main question to be addressed in criterion-related validity is how well the customer rating can predict the criterion.

The relationship between survey ratings and some external criterion (not measured through the customer survey) is calculated to provide evidence of criterion-related validity. This relationship is typically indexed using the Pearson correlation coefficient, but other methods for establishing this form of validity can be used. For example, we can compare survey ratings across groups that are known to differ and that should therefore show different satisfaction levels (reflected by their survey ratings). Specifically, satisfaction ratings should vary across different “Service Warranty Levels” and “Account Sizes.” The different treatment of various customer groups should lead to different customer satisfaction results. In these types of comparisons, Analysis of Variance (ANOVA) can be applied to the data to compare the customer groups. The ANOVA allows you to determine whether the observed differences across the customer segments are likely due to chance or are real and meaningful.
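Both checks described above can be sketched in a few lines of plain Python. Everything here is an assumption made for illustration: the ratings, the external criterion (`renewal_spend`, in thousands), the three warranty-level groups and the helper names are hypothetical, not data from an actual CEM program.

```python
from math import sqrt
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def one_way_anova_f(groups):
    """F statistic and degrees of freedom for a one-way ANOVA."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual ratings around their own group mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical satisfaction ratings and an external criterion per account.
satisfaction = [9, 7, 8, 3, 6, 10]
renewal_spend = [120, 80, 95, 20, 70, 130]
r = pearson_r(satisfaction, renewal_spend)
print(f"Correlation with external criterion: r = {r:.2f}")

# Hypothetical satisfaction ratings by Service Warranty Level.
premium = [9, 8, 10, 9]
standard = [7, 6, 8, 7]
basic = [4, 5, 3, 4]
f_stat, df_b, df_w = one_way_anova_f([premium, standard, basic])
print(f"ANOVA: F({df_b}, {df_w}) = {f_stat:.1f}")
```

The sketch stops at the F statistic. Turning it into a p-value requires the F distribution, which a statistics package such as SciPy (`scipy.stats.f_oneway`) provides directly.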

3. Construct-related Evidence

Construct-related evidence is concerned with the customer rating as a measurement of an underlying construct and is usually considered the ultimate form of validity. Unlike criterion-related validity, the primary focus is on the customer metric itself rather than on what the scale predicts. Construct-related evidence draws on both of the previous validity strategies (i.e., content and criterion). The questions in the survey should be representative of all possible questions that could be asked (content-related validity), and survey ratings should be related to important criteria (criterion-related validity).

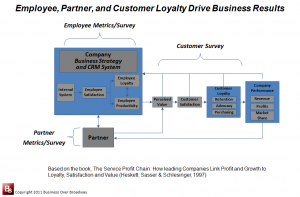

Service Delivery Model Highlights the Expected Relationships Among Different Organizational Variables

Because the goal of construct-related validity is to show that the survey is measuring what it is designed to measure, theoretical models are implicitly used to embed the constructs being measured (e.g., satisfaction, loyalty) into a framework (see Service Delivery Model). In customer satisfaction research, it is widely accepted that customer satisfaction is a precursor to customer loyalty. Consequently, we can calculate the relationship (via the Pearson correlation coefficient) between satisfaction ratings and customer loyalty questions (overall satisfaction, likelihood to recommend).

A high degree of correlation between the customer ratings and other scales/measures that purportedly measure the same/similar construct is evidence of construct-related validity (more specifically, convergent validity). Construct-related validity can also be evidenced by a low correlation between the customer ratings (e.g., customer loyalty) and other scales that measure a different construct (e.g., customer engagement) (more specifically, discriminant validity).
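As a rough sketch of how convergent and discriminant evidence might be checked, the example below correlates one loyalty question with a similar measure and with a measure of a different construct. The ratings, the `other_construct` scale and the 0.7 / 0.3 cut-offs are all hypothetical choices for illustration; there is no universally agreed threshold.

```python
from statistics import mean, pstdev

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    covariance = mean([(a - mx) * (b - my) for a, b in zip(x, y)])
    return covariance / (pstdev(x) * pstdev(y))

# Hypothetical 0-10 ratings from six respondents (illustrative only).
recommend = [10, 6, 8, 2, 7, 9]        # loyalty question
overall_sat = [9, 7, 8, 3, 6, 10]      # similar construct
other_construct = [6, 6, 4, 5, 4, 5]   # assumed to measure something different

convergent_r = pearson_r(recommend, overall_sat)
discriminant_r = pearson_r(recommend, other_construct)

# Assumed rule-of-thumb cut-offs, chosen only for this example.
print(f"Convergent: r = {convergent_r:.2f}, high = {convergent_r > 0.7}")
print(f"Discriminant: r = {discriminant_r:.2f}, low = {abs(discriminant_r) < 0.3}")
```

If a supposedly different scale correlates as highly with your loyalty questions as they do with each other, that is a hint the two scales may be measuring the same thing.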

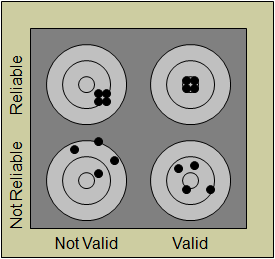

Difference Between Reliability and Validity

Reliability and validity are both necessary elements of good measurement. The figure to the right illustrates the distinction between these two criteria for measurement. The diagram consists of four targets, each with four shots. In the upper left-hand target, we see that there is high reliability in the shots that were fired, yet the bull’s-eye has not been hit. This is akin to having a scale with high reliability that is not measuring what it was designed to measure (not valid). In the lower right target, the pattern indicates that there is little consistency in the shots but that the shots are all around the bull’s-eye of the target (valid). The pattern of shots in the lower left target illustrates low consistency/precision (no reliability) and an inability to hit the target (not valid). The upper right pattern of shots represents our goal: precision/consistency in our shots (reliability) as well as hitting the bull’s-eye of the target (validity).

Applications of Reliability and Validity Analysis

The bottom line: a good customer survey provides information that is reliable, valid and useful. Applying psychometric standards like reliability and validity to customer feedback data can help you accomplish and reveal very useful things.

- Validate the customer feedback process: When CEM data are used for important business decisions (e.g., resource allocation, incentive compensation), you need to demonstrate that the data reflect real customer perceptions about important areas. Here is a PowerPoint presentation of a validation report.

- Improve account management: Reliability analysis can help you understand the extent of agreement between different Contacts within the same Account. Your account management strategy will likely be different depending on the agreement between Contacts within Accounts. Should each relationship be managed separately? Should you approach the Accounts with a strong business or IT focus?

- Create/Evaluate customer metrics: Oftentimes, companies use composite scores (average over several questions) as a key customer metric. Reliability analysis will help you understand which questions can/should be combined together for your customer metric. Here is an article that illustrates reliability and validity in the context of developing three measures of customer loyalty.

- Modify surveys: Reliability analysis can help you identify which survey questions can be removed without loss of information. Additionally, reliability analysis can help you group questions together in a way that makes the survey more meaningful to the respondent.

- Test popular theories: Reliability analysis has helped me show that the likelihood to recommend question (NPS) measures the same thing as overall satisfaction and likelihood to buy again (see Customer Loyalty 2.0). That is why the NPS is no better than overall satisfaction or likelihood to continue buying at predicting business growth.

- Evaluate newly introduced concepts in the field: One of my biggest pet peeves in the CEM space is the introduction of new metrics/measures without any critical thinking behind the metric and what it really measures. For example, I have looked into the measurement of customer engagement via surveys and found that these measures have literally the same questions as our traditional measures of customer loyalty (e.g., recommend, buy again, overall satisfaction); please see Gallup’s Customer Engagement Overview Brochure for an example. Simply giving a different name to a set of questions does not make it a new variable. In fact, Gallup says nothing about the reliability of their Customer Engagement instrument.

Summary

The ultimate goal of reliability and validity analyses is to provide qualitative and quantitative evidence that the CEM program is delivering precise, consistent and meaningful scores regarding customers’ attitudes about their relationship with your company. Establishing the reliability and validity of the CEM program needs to be one of the first research projects for any CEM program. A validation study builds confidence that business decisions based on the program's data lead to improved customer loyalty and business success. The validation efforts described here provide an objective assessment of the quality of the program to ensure the data reflect reliable, valid and useful customer feedback.

References (if you have a desire to learn about important measurement principles or suffer from insomnia)

Allen, M.J., & Yen, W. M. (2002). Introduction to Measurement Theory. Long Grove, IL: Waveland Press.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika, 16(3), 297-334.

Beyond the Ultimate Question

Measuring Customer Satisfaction and Loyalty (3rd Ed.)

I have given up on all customer surveys as nonsense! Most questions are poorly related to the area in question, and to give number scores for subjective experiences is a total violation. Most are too long and request service over quality.

For example, with respect to my physicians, the questions I receive seem to make it look like I was ordering a pizza instead of having surgery. These surveys are a sign of a greater social ill!