This is Part 1 of a series on the Development of the Customer Sentiment Index (see introduction here). The CSI assesses the degree to which customers possess a positive or negative attitude about you. This post covers the measurement of customers’ attitudes and the development of empirically-derived sentiment lexicons.

I was invited to give a talk at the Sentiment Analysis Symposium (in 2012) on the use of sentiment analysis in the measurement/prediction of customer satisfaction and loyalty. There was one problem. I am not expert in sentiment analysis. I have never had the need to apply the principles of sentiment analysis to customer feedback. Until now. The work presented below was inspired by this talk.

Measuring Customers’ Attitudes using Structured and Unstructured Data

You can measure customers’ attitudes in two ways. One way to measure customer satisfaction, for example, is through structured questions that require a rating from the customers (the method I use). While there are different ratings scales (1 to 5, 0 to 10, 1 to 7), the underlying premise of all of them is that the value of the rating scale is used to infer each customer’s level of satisfaction. Lower ratings indicate lower satisfaction than higher ratings (e.g., 0 = Extremely Dissatisfied to 10 = Extremely Satisfied). Customers convey their satisfaction through the ratings they choose to provide; customers who give higher ratings (say, a rating of 10) are more satisfied (have a more positive attitude) than customers who give lower ratings (say, a rating of 3).

Another way to measure attitudes is to apply sentiment analysis to unstructured text regarding what customers say about you. Formally, Wikipedia says “sentiment analysis (also known as opinion mining) refers to the use of natural language processing (NLP), text analysis and computational linguistics to identify and extract subjective information in source materials.” Unlike the use of structured questions and ratings, in sentiment analysis, algorithms are used to assign a numerical value to what customers say. This numerical value represents the customer’s attitude (from positive to negative) about you.

In a Word

I crafted a question that I use in customer surveys for my clients. This question asks the customer (respondent) to provide one word that best describes the company’s products/services. Customers are free to write anything for their response. The question is:

What one word best describes this company’s products/services?

Figure 1. Word cloud of responses from customer survey using the question, “What one word best describes this company’s products/services?

The impetus of this question was purely artistic. I use the responses to this question to generate word clouds. A word cloud is a collection of words such that the size of the words is associated with the frequency with which the word is used. Words that are used more frequently by customers appear larger than less frequently used words. As you can see, the word cloud (see Figure 1 for an example) provides a good high-level picture of how customers feel about you. Some of my clients use their word cloud in company-wide presentations, introductions to survey results and marketing collateral.

Then I had a thought. What if I apply sentiment analysis principles to these words? Each word could be assigned a specific sentiment score that represents the customers’ sentiment of you. This sentiment score could then be used in predictive modeling to improve decision making.

I looked into sentiment lexicons.

Sentiment Lexicons

A sentiment lexicon is a list of words that reflect an opinion on a negative-positive continuum. Each word in the sentiment lexicon is assigned a value that reflects the degree to which the word is positive or negative.

Developing Sentiment Lexicons

I wanted to develop a sentiment lexicon from scratch. I took two approaches: An empirically derived approach and a human judgement approach. In the empirical approach, an algorithm is used to assign a sentiment value to each word based on analysis of existing data. Assigning sentiment values to words is purely a mathematical exercise. Data from online review sites, like IMDB and Goodreads, can be used because each review represents: 1) an overall quality rating (1 to 10 stars) of a movie (book) and 2) a written review of the movie (book). Assigning a sentiment value to a specific word is done by calculating the average rating that was given whenever the specific word appears.

In the human judgement approach, subject matter experts are tasked to assign sentiment values to each word.

For the remainder of this post, I will describe the approach I took in developing empirically-derived sentiment lexicons. I will describe the judgment-based approach in the next post.

Empirically based sentiment

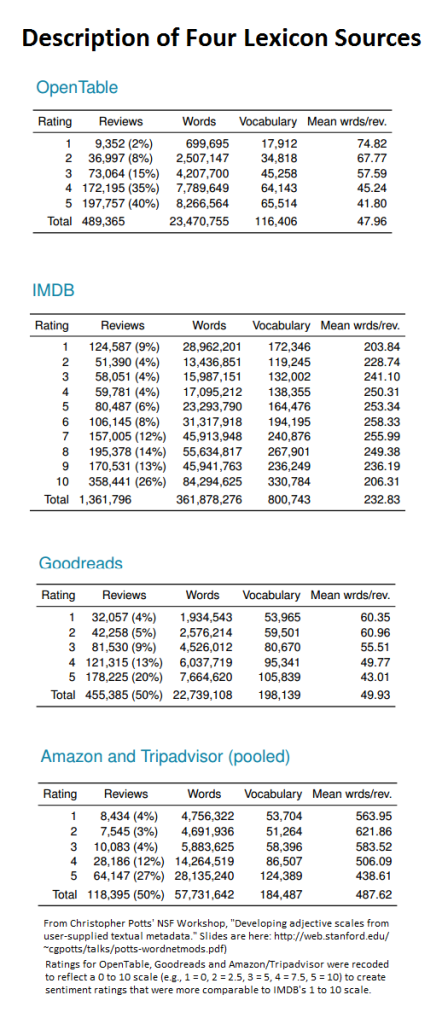

I emailed Christopher Potts (I attended his tutorial on sentiment analysis at the aforementioned symposium) about my desire to develop sentiment lexicons, and he was kind enough to send me a few links to data sets (2011) from four different corpora: Goodreads, IMDB, OpenTable and Amazon/Tripadvisor. Details of each corpus can be found here.

For each of these four online review sites, customers provide a single overall rating on a standard scale (1 to 5 for OpenTable, Goodreads and Amazon/Tripadvisor; 1 to 10 for IMDB) that reflected measure of goodness (higher ratings mean better quality as perceived by the reviewer). With each rating, customers also provided a written summary of their review.

A description of each of the four data corpora can be found in Table 1.

For each data source, I was able to calculate an average rating for each word. The average rating for a particular word reflects its sentiment value.

Before calculating averages for three of the sources, I transformed the 1 to 5 scale to a 0 to 10 scale (1 = 0, 2 = 2.5, 3 = 5, 4 = 7.5, 5 = 10) to approximate the IMDB rating scale of 1 to 10. This transformation allowed me to more easily compare the different lexicons. Thus, possible sentiment values for each word from Goodreads, OpenTable and Amazon/Tripadvisor can range from 0 to 10. The sentiment values for words from IMDB can vary from 1 to 10. Lower sentiment values represent more negative sentiment. Higher sentiment values represent more positive sentiment.

Distribution of Sentiment Values for Each Sentiment Lexicon

Figure 1 contains the frequency distribution of sentiment values of words within each four data sources. For example, of the 4435 words found in the OpenTable data source, their average sentiment value was 6.73.

We find a few differences among the four sentiment lexicons. The derived sentiment value of words depends on the data source. In paired t-tests (all statistically significant), I found that data sources resulted in different sentiment values. Sentiment values were generally the lowest when based on Goodreads data (mean sentiment = 6.3) and highest when based on Amazon/Tripadvisor data (7.2). OpenTable (6.8) and IMDB (6.8) were in between.

Sentiment values showed less variability for IMDB (standard deviation = .9) than for the other data sources (SD = 1.4). This difference could be due to the sheer sample size of the IMDB source. Sentiment values using the IMDB sample are based on more observations (median number of observations for each word = 45) compared to the other samples (8), thus providing a more reliable metric.

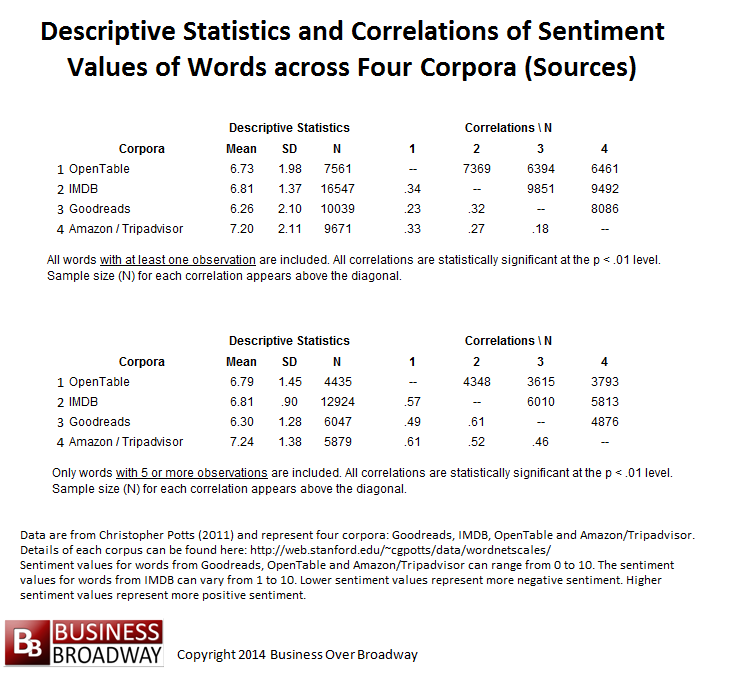

Table 2. Descriptive Statistics and Correlations of Sentiment Values of Words across Four Corpora (Sources)

The descriptive statistics of sentiment ratings for words appears in Table 2, including the mean sentiment value (Mean) across all words, the standard deviation (SD) of the sentiment values and number of words in the lexicon (N).

I also calculated the correlations of sentiment values across words from the different lexicons. For the top part of Table 2, I calculated descriptive statistics for any word that appeared in the data source at least once. For the bottom part of Table 2, I calculated descriptive statistics for any word that appeared in the data, at least, five times.

Sentiment values of words that are based on more observations provide more reliable estimates than sentiment values of words based on a few observations. Results in Table 2 support this conclusion; the average correlation of sentiment scores across data sources using all words was .28. This correlation improves to .54 when I used words with five or more observations. The remaining analyses include only this subset of words.

Context is King

I found that, on average, the four different lexicons only share about 30% of their variance with each other (see correlations in Table 2). That is, the different lexicons are providing different sentiment values for the same words.

Depending on the context of the problem, different words have different sentiments. For example, the word, “predictable,” has a less positive sentiment in the context of movie reviews (IMDB sentiment = 5.39) compared to restaurants reviews (Opentable sentiment = 6.22). Also, the word, “gritty,” may be positive for a book review (Goodreads sentiment = 7.11) but negative for a restaurant review (Opentable sentiment = 4.91). The low correlations among the sentiment values across the different lexicons may simply reflect the difference in context.

A challenge in using sentiment lexicons is to ensure the lexicon you use in your sentiment analysis is appropriate for the task you are trying to solve. In my current situation, I want to use sentiment lexicons for purposes of determining customers’ sentiment of companies and brands. Consequently, I would expect that using lexicons that are based on similar samples would provide better (e.g., more reliable, valid) sentiment values. Therefore, I expect sentiment lexicons based on OpenTable and Amazon/Tripadvisor ratings to be more useful to me than lexicons based on IMDB and Goodreads. I will explore this possibility in the next post. Additionally, I will create a sentiment lexicon based on expert judgment.

Summary

In the process of developing the Customer Sentiment Index (CSI), a new CX metric based on a single-word response from customers, I created four empirically-derived sentiment lexicons to translate words into numerical values of sentiment.

Each sentiment lexicon was based on a different corpus from online review sites. For each review site corpus, the sentiment value of each word was calculated by averaging the ratings that were given by reviewers who used the word in their reviews. Despite using the same methodology to develop the lexicons, there was low agreement in sentiment values for words across different lexicons. This finding suggests that different lexicons will lead to different results. The use of any lexicon needs to consider the sample on which the lexicon was based as well as the target sample on which the lexicon will be used.

In the next post, I will explore the differences of these lexicons and develop another sentiment lexicon based on expert judgment.

Beyond the Ultimate Question

Beyond the Ultimate Question Measuring Customer Satisfaction and Loyalty (3rd Ed.)

Measuring Customer Satisfaction and Loyalty (3rd Ed.)

This article goes beyond the typical post that I have been seeing for the past years. It’s incredible and has real actionable best practices. Also I have found this one http://www.lionleaf.com/blog/getting-customers-via-linkedin/ which gives positive reviews as well but I like most of your ideas very much. Do you have any articles that helps increase exposure of your LinkedIn profile?

Eagerly waiting for the next posts Bob on this Index, really enjoying the knowledge you share.