I wrote about net scores last week and presented evidence that net scores are ambiguous and unnecessary. A net score is the difference between the percentage of “positive” scores and the percentage of “negative” scores. Net scores were made popular by Fred Reichheld and Satmetrix in their work on customer loyalty measurement. Their Net Promoter Score is the difference between the percentage of “promoters” (ratings of 9 or 10) and the percentage of “detractors” (ratings of 0 through 6) on the question, “How likely would you be to recommend <company> to your friends/colleagues?”

The resulting Net Promoter Score is used to gauge the level of loyalty for companies or customer segments. In my post, I presented what I believe to be sound evidence that mean scores and top/bottom box scores are much better summary indices than net scores. Descriptive statistics like the mean and standard deviation describe the location and spread of the distribution of responses. Top/bottom box scores provide precise information about the size of customer segments. Net scores do neither.
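To make the ambiguity concrete, here is a minimal sketch that computes the summary indices discussed above for two sets of ratings. The ratings are invented purely for illustration and are not the data from my analyses. The function name `summarize` and the use of the population standard deviation are my own assumptions for this example.

```python
from statistics import mean, pstdev


def summarize(ratings):
    """Summarize 0-10 'likelihood to recommend' ratings (hypothetical helper)."""
    n = len(ratings)
    # Top box: percentage of promoters (ratings of 9 or 10).
    top_box = 100 * sum(r >= 9 for r in ratings) / n
    # Bottom box: percentage of detractors (ratings of 0 through 6).
    bottom_box = 100 * sum(r <= 6 for r in ratings) / n
    return {
        "mean": mean(ratings),
        "sd": pstdev(ratings),  # population SD; an assumption for this sketch
        "top_box": top_box,
        "bottom_box": bottom_box,
        "net": top_box - bottom_box,  # the net score
    }


# Two made-up samples of ten respondents each.
polarized = [10] * 5 + [0] * 5  # half promoters, half detractors
lukewarm = [7] * 5 + [8] * 5    # all passives

for name, ratings in [("polarized", polarized), ("lukewarm", lukewarm)]:
    print(name, summarize(ratings))
```

Both samples produce the same net score, yet their means, spreads, and top/bottom box percentages differ. The net score alone cannot tell you which of these two customer bases you have.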

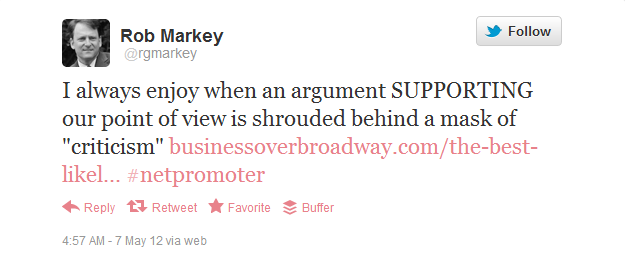

Rob Markey, the co-author of the book, The Ultimate Question 2.0 (along with Fred Reichheld), tweeted about last week’s blog post.

I really am unclear about how Mr. Markey believes my argument is supporting (in CAPS, mind you) the NPS point of view. I responded to his tweet but never received a clarification from him.

So, I present this post as an open invitation for Mr. Markey to explain how my argument regarding the ambiguity of the NPS supports their point of view.

One More Thing

I never deliver arguments shrouded behind a mask of criticism. While my analyses focused on the NPS, my argument against net scores (difference scores) applies to any net score; I just happened to have data on the recommend question, a common question used in customer surveys. In fact, I ran the same analyses (e.g., comparing means to net scores) on other customer loyalty questions (e.g., overall satisfaction, likelihood to buy), but I did not present those results because they were highly redundant with what I found using the recommend question. The problem of difference scores applies to any customer metric.

I have directly and openly criticized the research on which the NPS is based in my blog posts, articles, and books. I proudly stand behind my research and critique of the Net Promoter Score. Other mask-less researchers/practitioners have also voiced concern about the “research” on which the NPS is based. See Vovici’s blog post for a review. Also, be sure to read Tim Keiningham’s interview with Research Magazine in which he calls the NPS claims “nonsense”. Yes. Nonsense.

Just to be clear, “Nonsense” does not mean “Awesome.”

Beyond the Ultimate Question


Measuring Customer Satisfaction and Loyalty (3rd Ed.)

NPS is the same as or worse than Customer Satisfaction, period. Tim Keiningham has done stellar work proving the nonsense of its promises. I personally have compared datasets and never found NPS to explain spend or any other behavioural variable better than Satisfaction does. What has happened is that easy words are being used by ignorant people to sell expensive software.